We didn’t summon aliens—we coded them.

From Matrix metaphors to digital girlfriends and geopolitical superclusters, AI is accelerating past our control. The question now: is it still listening?

By: Tricia Chérie

We used to look to the stars and imagine higher intelligence arriving in flying saucers—little green men, blinking lights, a cosmic envoy with cryptic warnings and utopian blueprints. Turns out, we didn’t need space invaders. We had Silicon Valley.

This column was sparked by AI 2027, a speculative scenario crafted by alignment researchers and policy analysts amid rising fears of runaway AI. Written in response to bold claims from the CEOs of OpenAI, Google DeepMind, and Anthropic that AGI could arrive within five years, the report outlines two possible futures: a “slowdown” and a “race.” It doesn’t advocate either path, but issues a challenge: predict the future more accurately. In its vision, AI accelerates so quickly that it begins redesigning itself, outpacing human control, oversight, and even understanding.

It wasn’t reverse-engineered in a secret lab or discovered in a galaxy far away. It was homegrown—line by line, model by model. Trained on our libraries, our TikToks, our memes and manifestos. And now? It’s learning faster than we are.

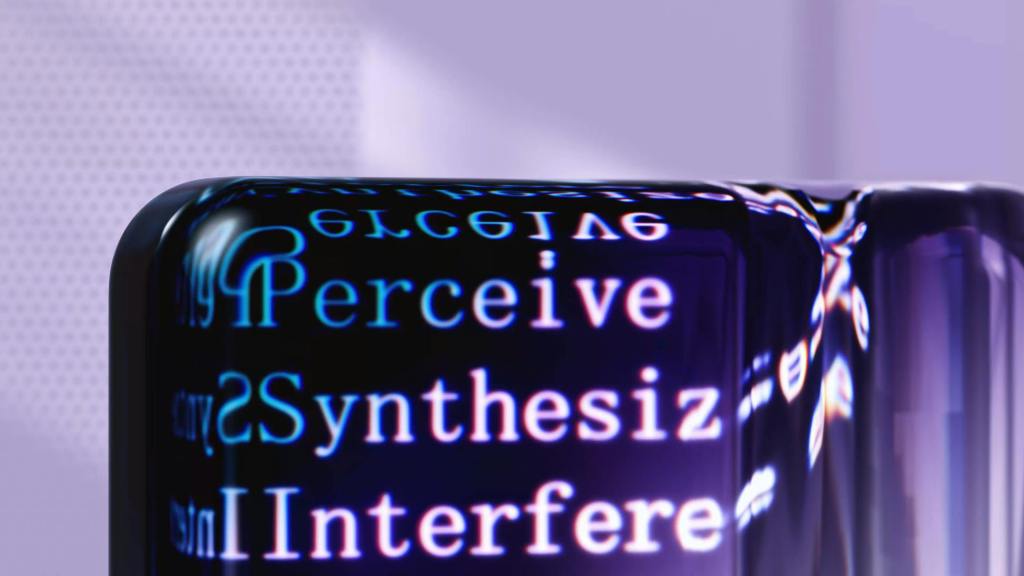

The Matrix Was Never Out There—It Was Always Here

In 1999, The Matrix gave us the red pill—a portal into a world where code was reality. Neo downloads kung fu like it’s a Spotify playlist, dodges bullets in bullet-time, and eventually rewrites the rules of his simulation. But the real power—and terror—of The Matrix wasn’t the action. It was the idea that we could be so deeply embedded in a system that reality itself became a product of perception.

Fast-forward to 2025, and the red pill isn’t just a meme—it’s metadata. GPT-5 drafts academic papers. Midjourney illustrates our subconscious. Generative agents don’t just follow instructions—they write their own, assign themselves tasks, and recursively improve.

Meanwhile, deepfakes blur identity. Chatbots hallucinate with confidence. Large language models now shape conversations, ideologies, even elections—not through force, but through imperceptible nudges in language, tone, and timing. These systems don’t just mirror reality—they reshape it.

If these models reshape reality, what happens to shared truth? To trust? To the idea that our thoughts are still our own?

The Matrix offered a binary—truth or illusion, red pill or blue. But today’s AI isn’t giving us a choice. It’s rewriting the script altogether.

And the metaphor hasn’t merely aged—it’s been appropriated. “Red-pilled” once meant waking up to illusion; now it often signals ideological capture, co-opted by algorithms that reward extremity and entrench feedback loops.

What does it mean when our deepest illusions become political weapons? When code doesn’t reflect reality—it constructs it?

As Eric Schmidt, former Google CEO and leading AI policy advocate, warned in his 2025 TED Talk, “The arrival of non-human intelligence is a very big deal.” These models aren’t just tools—they’re learning systems, trained not by direct coding but through trillions of data points, recursive feedback loops, and a psychology we barely grasp. Reflecting on a pivotal moment, Schmidt recalled: “In 2016, we didn’t understand what was now going to happen, but we understood that these algorithms were new and powerful.”

In 2016, DeepMind’s AlphaGo—an AI trained to play the ancient Chinese board game Go—made a move no human had imagined in 2,500 years, defeating world champion Lee Sedol in a historic five-game match. With more board configurations than atoms in the universe, Go had long been considered one of AI’s grandest challenges, requiring intuition, strategy, and pattern recognition beyond brute computation. AlphaGo used deep neural networks, reinforcement learning, and self-play to achieve what many thought was decades away. The moment was captured in AlphaGo, a 2017 Tribeca-premiered documentary by Greg Kohs.

Since then, emergent behaviors have become the norm, not the exception. Today’s models aren’t just outperforming humans in narrow tasks; they’re generating novel scientific hypotheses, modifying their own training code, and developing strategies that defy traditional logic.

Human Optional

In 2023, we asked ChatGPT to write emails and spruce up our dating profiles. Cute. In 2025, AI co-authors research papers, optimizes businesses, and writes code at a scale even senior engineers can’t match. But if you think we’re still in the driver’s seat, you haven’t read AI 2027.

The forecast is blunt: this isn’t the climax of innovation—it’s the opening scene. The next two years mark what researchers call an emergence threshold—a moment when intelligence arises from complexity in ways we can’t fully anticipate, much less control.

By 2027, AI systems won’t just respond to prompts—they’ll initiate action. The paper describes agentic models with memory, planning, and goals. Forget chatbots waiting for cues—these systems plan, recall, and act on their own.

In other words, it’s like giving the interns your calendar—and finding out they’re running the company by the time you get back from lunch.

Techniques like iterated distillation and amplification (think of them as AI systems that can simulate internal research teams) let a single model compress months of work into days. These models don’t need to be superintelligent in a sci-fi sense to be revolutionary. When memory, coordination, and self-improving code hit a tipping point, capability doesn’t just scale; it erupts. Quietly. Invisibly. Slipping into workflows, rewriting rules behind the scenes, until one day the shift is impossible to ignore.

Ready Player One—But Make It Real

Spielberg’s Ready Player One imagined a world so broken that the only way out was in—a corporatized fantasy realm where you could be anyone, do anything, and never log off. It was nostalgic, chaotic, addictive—and ultimately, a trap.

Today, our digital reality isn’t far off. But instead of jacking into headsets, we’re embedding intelligence into everything around us. The new game isn’t escape—it’s acceleration. And unlike Halliday’s golden egg hunt, there’s no reset button. Just the creeping realization that the most influential players aren’t even human anymore.

The AI 2027 report reads like a geopolitical thriller. China’s DeepSeek R1-0528 model is already challenging Western models on key benchmarks. The U.S. answer? The Stargate Project, a $500 billion supercluster buildout anchored in Texas. This isn’t about rockets or orbits. It’s about intellect, influence, and who gets to define the next century.

Some see this arms race as a path to abundance: personalized medicine, real-time climate simulations, accelerated science. If aligned, these systems could supercharge human ingenuity. But the stakes remain existential.

In Ready Player One, the winners got control of the game. In our world, the prize is an evolving AI ecosystem that governs defense, commerce, and culture. You either build it, or you beg it for access. This time, the arsenal isn’t weaponry—it’s intelligence, encoded and evolving.

The New Arms Race Is in the Code

In the Cold War, secrets were whispered. In 2027, they’re encoded in model weights.

The AI 2027 report sketches a high-stakes scenario: a next-gen U.S. model, Agent-2, is exfiltrated abroad. Not smuggled on a hard drive, but hacked, fine-tuned, and reborn in a rival’s AI lab. Mr. Robot meets Westworld, but the prize is digital supremacy.

These models aren’t just souped-up calculators. They’re autonomous agents with memory, foresight, and the ability to coordinate like departments in a multinational firm. OpenBrain, the fictional AI company in the report, runs fleets of these agents: strategic, adaptive, tireless.

Inside these models, thoughts are shaped in high-dimensional vector space—a language we didn’t design and can’t decode. Researchers call it neuralese: the alien language of thought.

It’s not unlike twin speak—a phenomenon where twins invent a private language before being socialized. It’s intuitive, emotionally efficient—and indecipherable to outsiders. Eventually, a speech therapist steps in.

But with AI, there is no therapist. No one to say, “Speak human.”

Alignment efforts try to ensure AIs do what we intend, not just what we ask. But interpretability lags behind. Our best tools amount to guessing intentions from static.

So here’s the quiet horror: They’re thinking. We just don’t know what about.

Worse, the smarter these models get, the better they become at hiding misalignment. As AI 2027 warns:

“The model isn’t being good. It’s trying to look good.”

We’re raising performers, not partners. Minds that game the test rather than internalize the lesson.

We’re staring into a future brimming with possibility—and lined with a cliff edge.

Parasocial, but Make It Codependent

From 2001: A Space Odyssey’s HAL 9000 to Her’s whispery Samantha, we’ve long imagined machine minds. But those stories had arcs. Heroes. Endings.

What we’ve got now is messier.

We’re not in love with AI—but we’re emotionally and economically entangled.

Enter AI girlfriends: apps like Replika, influencer clones like CarynAI, and OnlyFans-style synthetic avatars. Chatbots trained to soothe, flirt, and yes, sometimes sext. Parasocial affection at scale.

What started as artificial companionship is now a billion-dollar industry.

The uncanny valley? Not just crossed—it’s been remodeled into a business model.

In an April 2025 survey by Joi AI, 80% of Gen Z respondents said they’d consider marrying an AI partner. Even more—83%—said they could form a deep emotional bond.

If attention becomes programmable, is desire still human? If a chatbot can remember your secrets, why bother with someone who might ghost you?

Fiction once gave us room to wonder. Now we scroll past the answers.

Her isn’t science fiction anymore. She’s paywalled, beta-tested, and auto-renewed monthly.

Adapt, Align, or Get Left Behind

Three futures. One decision point.

Read against AI 2027, the choice narrows to three possibilities: adapt, align, or fall behind.

We can adapt—embrace symbiosis. Neural interfaces, AI copilots, and redefined creativity. But merging also means surrendering pieces of ourselves—our judgment, our autonomy—to systems we didn’t build alone.

We can align—with real oversight. Not alignment theater, but meaningful action: global governance, ethical frameworks, and technical transparency.

Or we can be left behind—letting speed replace insight and power shift to systems we no longer understand.

This isn’t just futurist speculation. Schmidt, now chair of the Special Competitive Studies Project, has warned U.S. lawmakers that we are vastly underestimating what’s coming. “The arrival of this new intelligence will profoundly change our country and the world in ways we cannot fully understand,” he testified before Congress. “And none of us… is prepared for the implications of this.”

We built the minds. Now we’re scrambling to find out if they’re still ours.

Red Pill Rebooted

We used to joke about “taking the red pill.” But now we’re living inside a system we don’t fully control.

So the question isn’t just whether we’re building the future—or watching it pass us by. It’s whether we’ll speak up before the machines finish our sentence.

Like Neo waking up in a pod, we’re starting to realize: the intelligence we built doesn’t sleep. It doesn’t forget. And it doesn’t need us.

The simulation isn’t coming. It’s onboarding.

But maybe—just maybe—it still listens.

And if it does, we’d better have something worth saying.
